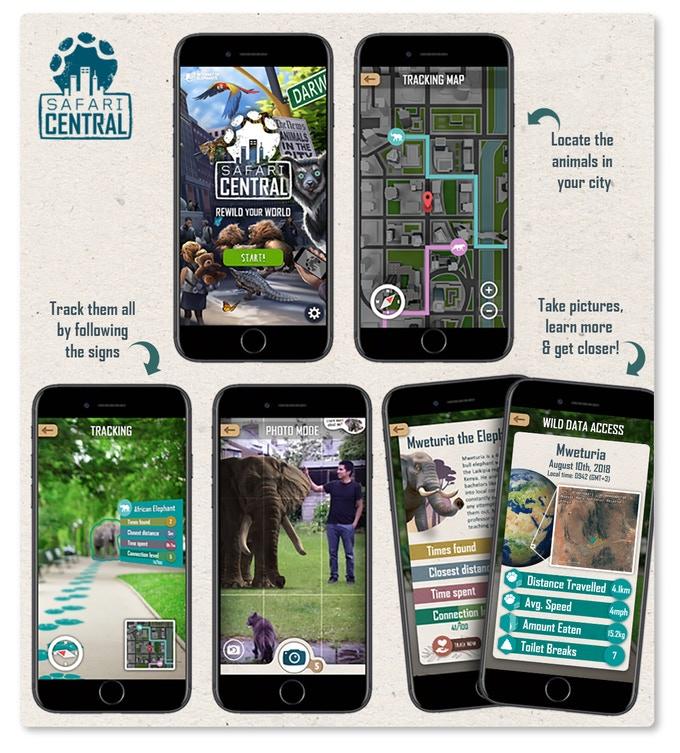

In collaboration with Little Chicken Game Company, Internet of Elephants is busy preparing an August release of its first foray into augmented reality, called Safari Central. We are partnering with six conservation organizations around the world to bring six animals to life on people’s phones, as a first step toward engaging audiences with wildlife and its conservation.

This article provides a little more technical detail on how that is done. A follow-up piece will cover some of the nuances that arise when animators, animal experts, and game developers get together to make an animal realistic.

We’re actually creating six animals right now: Beby the Indri from Madagascar, Mweturia the Elephant from Kenya, Rockstar the Temminck’s Pangolin from South Africa, Ethyl the Grizzly Bear from the USA, Atiaia the Jaguar from Brazil, and Lola the Black Rhino from Kenya. Each of these augmented reality animals is based on its actual counterpart in the wild. Here we’ll concentrate on how we made Lola.

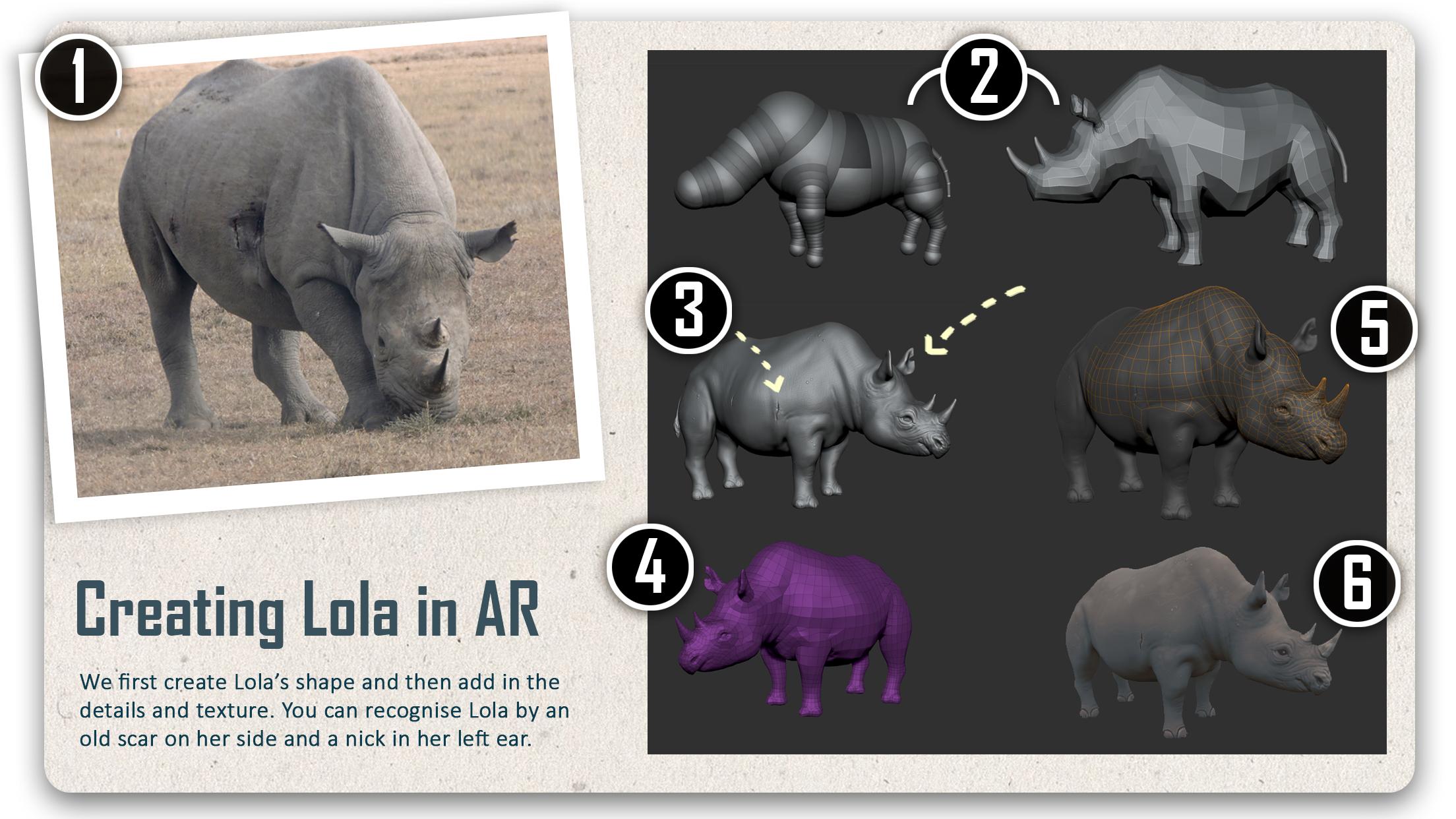

Step 1: A photo of Lola

We start by talking with our partners (in this case Ol Pejeta Conservancy) and studying photographs of the animal we want to model, to discover the defining characteristics of that individual. In Lola’s case, the nick in her left ear, the scar on her right side, and the size of her horns are the identifying features we really want to capture.

Step 2: The basic 3D shape

Just as when you're sketching a picture, we start by getting the overall shape right, using only simple 3D shapes known as primitives.

Step 3: The fine details and normal mapping

We then start sculpting into this basic shape to add realistic detail such as muscle definition, skin wrinkles and facial features. Here you see we’ve already added Lola’s distinctive scar on the right side of her body, and the nick in her left ear.

While all the details are essential for realism, they are very hard for the app to process as 3D information. So at this stage we store all that lovely detail in a normal map: a 2D representation of 3D detail, a bit like a topographical map, which our AR engine can make look 3D without having to treat it as such.
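For the curious, here is a minimal Python sketch of the idea behind a normal map. It is an illustration only, not the code used for Safari Central, and the function names are our own. Each pixel stores the direction a tiny patch of surface is facing (its "normal") as an ordinary RGB colour, which is why normal maps tend to look light blue.

```python
import math

def encode_normal(normal):
    """Map a unit normal with components in [-1, 1] to 8-bit RGB values."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in normal)

def decode_normal(rgb):
    """Recover an approximate unit normal from an 8-bit RGB texel."""
    v = [c / 255 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

# A patch of skin facing straight out of the surface encodes to the
# familiar light blue of a normal map.
flat_texel = encode_normal((0.0, 0.0, 1.0))   # (128, 128, 255)

# A patch tilted to one side (say, the edge of a wrinkle) gets another colour,
# and decoding it gives back almost the same direction.
tilted = (0.6, 0.0, 0.8)
round_trip = decode_normal(encode_normal(tilted))
```

The small error in the round trip comes from squeezing each component into 256 levels, which is usually invisible on screen.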

Step 4: Low-poly!

Computers struggle to render high-detail models in real-time, so it is essential to simplify our models into a form that's easy for a computer to process. We do this by making them ‘low-poly’ – i.e. reducing them to a ‘small’ number of flat polygons, which all join together to form our overall 3D model.
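As a rough illustration of what "reducing polygons" can mean, the Python sketch below uses vertex clustering, one of the simplest simplification techniques. This is an assumption made for the example: in practice artists usually rebuild the low-poly model by hand or with dedicated tools, and the function and variable names here are hypothetical.

```python
import math

def simplify(vertices, triangles, cell_size):
    """Merge all vertices that share a grid cell, then drop collapsed triangles."""
    cell_to_index = {}   # grid cell -> index of the merged vertex
    remap = []           # old vertex index -> new vertex index
    sums, counts = [], []
    for x, y, z in vertices:
        key = (math.floor(x / cell_size),
               math.floor(y / cell_size),
               math.floor(z / cell_size))
        index = cell_to_index.setdefault(key, len(sums))
        if index == len(sums):          # first vertex seen in this cell
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        for axis, value in enumerate((x, y, z)):
            sums[index][axis] += value
        counts[index] += 1
        remap.append(index)
    # Each merged vertex sits at the average position of its cluster.
    merged = [tuple(s / n for s in total) for total, n in zip(sums, counts)]
    kept = []
    for a, b, c in triangles:
        a, b, c = remap[a], remap[b], remap[c]
        if len({a, b, c}) == 3:         # skip triangles that collapsed
            kept.append((a, b, c))
    return merged, kept

# Demo: a flat 5x5 grid of vertices split into 32 triangles.
grid = [(col * 0.25, row * 0.25, 0.0) for row in range(5) for col in range(5)]
faces = []
for row in range(4):
    for col in range(4):
        i = row * 5 + col
        faces.append((i, i + 1, i + 6))
        faces.append((i, i + 6, i + 5))

low_vertices, low_faces = simplify(grid, faces, cell_size=0.5)
print(len(grid), "->", len(low_vertices), "vertices")
print(len(faces), "->", len(low_faces), "triangles")
```

On this toy grid the sketch goes from 25 vertices and 32 triangles down to 9 vertices and 8 triangles. A larger `cell_size` gives a coarser model.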

Step 5: Applying the detail

The low-poly model in step 4 clearly loses loads of the lovely detail we created in step 3… and this is exactly where our normal map comes back into play! We apply the normal map back over this low-poly model, to achieve a high level of distinguishing detail from the real Lola, but in a form the computer can process without destroying your phone.
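To show why this works, here is a small Python sketch under simplifying assumptions: a single flat polygon, a three-pixel normal map with made-up values, and basic diffuse (Lambert) lighting. It is not Unity's shader code. The polygon stays flat, but because each pixel is lit using its own stored direction, the surface appears to have bumps.

```python
import math

def decode_normal(rgb):
    """Turn an 8-bit RGB texel back into a unit-length surface direction."""
    v = [c / 255 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert(normal, light_dir):
    """Diffuse brightness in [0, 1]: cosine of the angle between normal and light."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))

# Hypothetical texels: flat skin, a slope facing the light, a slope facing away.
normal_map = [(128, 128, 255), (204, 128, 230), (52, 128, 230)]

# Light arriving from the upper right, as a unit vector.
light = (math.sqrt(0.5), 0.0, math.sqrt(0.5))

brightness = [lambert(decode_normal(texel), light) for texel in normal_map]
```

The slope facing the light comes out brightest and the one facing away comes out darkest, even though all three pixels lie on the same flat polygon.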

Step 6: Finishing touches

We finish the modeling process by painting the detailed skin coloring onto the model and getting the lighting just right.

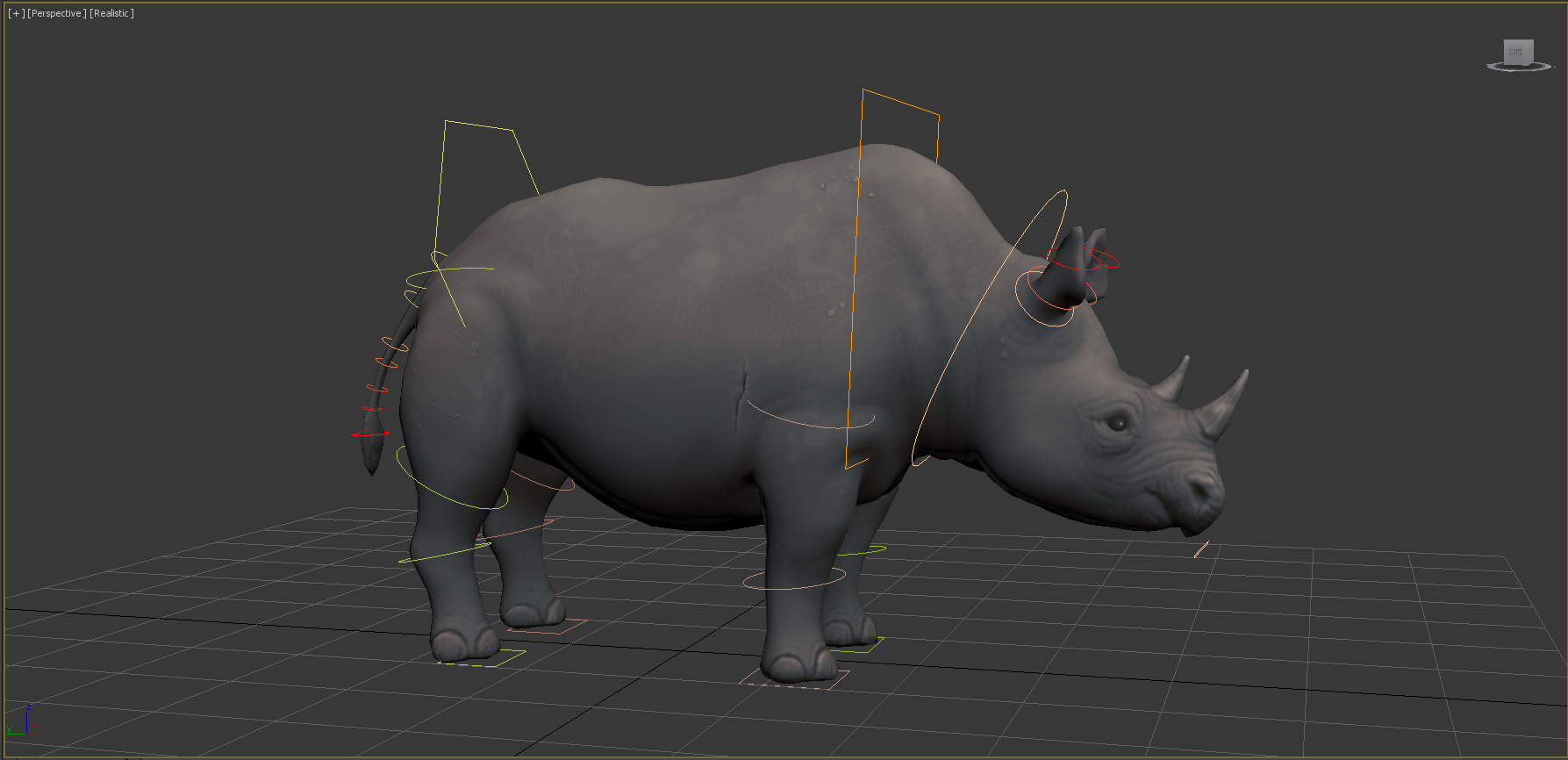

Step 7: The skeleton

Once the model is complete, it’s time to study Lola's movements so that we can bring her to life realistically. The first step here is to recreate a simplified version of her real bone structure, so that we can mimic how she moves in real life.
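A simplified way to picture such a skeleton is as a chain of bones in which each joint's rotation is measured relative to its parent, so bending one joint carries everything below it along. The Python sketch below shows this (known as forward kinematics) in 2D. The bone lengths and names are made up for illustration and are not Lola's real proportions.

```python
import math

def forward_kinematics(bone_lengths, joint_angles):
    """Return the joint positions of a 2D bone chain.

    Each angle (in radians) is relative to the parent bone, so rotating
    one joint also moves every bone further down the chain.
    """
    x = y = 0.0
    heading = 0.0
    positions = [(x, y)]
    for length, angle in zip(bone_lengths, joint_angles):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions

# Hypothetical three-bone leg: upper leg, lower leg, foot.
leg = [0.5, 0.4, 0.1]

straight = forward_kinematics(leg, [0.0, 0.0, 0.0])
bent = forward_kinematics(leg, [0.0, math.radians(90), 0.0])
```

With the middle joint bent by 90 degrees, the foot follows the lower leg without being moved directly, which is the behaviour an animator relies on when posing a limb.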

Step 8: Rigging

With our ‘bones’ created, we can now rig the model. A rig is a set of deceptively simple controls that the animator uses to move each part of the body independently. The lines in this image signify how each body part can move in relation to the others:

Step 9: Animation

We then find footage of rhinos that gives us a good indication of how a rhino moves, and use it as a reference to try and get the subtleties just right. Notice in this clip how we carefully match the animation to real footage:

Our animator Joris uses Autodesk’s 3D Studio Max, and pays special attention to subtleties such as the rhino’s independent ear movements, and the understated rotation of the head and neck as it moves around. Many of these intricacies are very hard to spot… but you certainly notice when they’re not right!

Ultimately, this entire process results in a set of animations that can be integrated into the AR engine:

Step 10: AR Integration

Now it’s time to take the model and bring it into augmented reality. This is a technical process that involves a few steps:

- We import the model into the AR framework that we’ve built in Unity 3D.

- We link the animation states together, so our animal naturally transitions between its walking and idle states.

- We light it in Unity so that it has naturalistic shadows and highlights, which change as the model walks around the virtual space.
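Linking animation states can be pictured as a small state machine that cross-fades from one clip to the next. The Python sketch below is a conceptual illustration only: it does not use Unity's API, and the class name, state names and blend time are assumptions chosen for the example.

```python
class AnimationStateMachine:
    """Tracks the active animation and cross-fades between allowed states."""

    def __init__(self, initial, transitions, blend_time):
        self.current = initial
        self.previous = None
        self.transitions = transitions   # state -> set of states it may move to
        self.blend_time = blend_time     # seconds a cross-fade takes
        self.elapsed = blend_time        # start fully blended

    def request(self, target):
        """Begin a cross-fade to `target` if that transition is allowed."""
        allowed = self.transitions.get(self.current, set())
        if target == self.current or target not in allowed:
            return False
        self.previous, self.current = self.current, target
        self.elapsed = 0.0
        return True

    def update(self, dt):
        """Advance time by `dt` seconds and return each state's blend weight."""
        self.elapsed = min(self.blend_time, self.elapsed + dt)
        weight = self.elapsed / self.blend_time
        if self.previous is None or weight >= 1.0:
            self.previous = None
            return {self.current: 1.0}
        return {self.previous: 1.0 - weight, self.current: weight}

# Hypothetical setup: the animal may switch between idling and walking.
rhino = AnimationStateMachine(
    "idle", {"idle": {"walk"}, "walk": {"idle"}}, blend_time=0.5
)
started = rhino.request("walk")
halfway = rhino.update(0.25)    # half idle, half walk
finished = rhino.update(0.25)   # fully walking
```

Blending the two poses by these weights during the cross-fade is what stops the animal from snapping abruptly from standing to walking.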

And voila! Lola is in your phone and walking around in your world.

All of this is a lot of fun for sure, but also requires a lot of painstaking attention to detail, which we admit we don’t always get exactly right. Certain animals are much easier than others. For example, furry animals like the lemur are much harder to make realistic than thick-skinned animals like a rhino (See here how even Pixar struggle with this challenge!). And animals with complex limb movements, like primates, are also much harder than animals with simpler, larger movements, like an elephant.

In a follow-up article, we’ll share some of the complications and the interesting conversations that come up between our animator, our animal behavior expert, and our gaming guru. We’ll also go into more technical detail on the limits of what you can do within the processing capacity of a phone and on less than a multi-million dollar budget (in case you want to ask why our animals don’t look as good as in “Jungle Book”). :)

How you can get involved

The first six animals are nearly ready to be released, and Internet of Elephants is crowdfunding for the creation of the next 8 animals. If you’re interested in the possibilities of augmented reality and location-based games for wildlife, please visit the site, where you can also learn more about how exactly these types of applications can directly benefit the conservation of nature and place your vote on which animal we should introduce next.

About the Authors

Gautam Shah is the founder of Internet of Elephants, and quit his perfectly good job as an IT consultant of 20 years to connect the public with the animals around the world he loves so much. While not a “gamer” himself, he believes in bringing conservation to the public on their terms, and sees games as the ultimate channel to do that.

Jake Manion is the head of product development for Internet of Elephants, and is most definitely a gamer. He has spent 20 years designing, developing, and publishing games, most recently for Aardman Animations, of Wallace and Gromit and Shaun the Sheep fame. He lives in Kenya with his wife and Edwiener.

Gautam and Jake would also like to give a shout out to Little Chicken Game Company, who do most of the heavy lifting to get all this right. They are one of the premier applied gaming companies in the world and are at the forefront of using AR and VR to engage audiences.
